#include "cnrt.h"

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include "cnpy.h"

#include <complex>

#include <cstdlib>

#include <iostream>

#include <map>

#include <string>

#include <opencv/cv.h>

#include <opencv/highgui.h>

using namespace cv;

using namespace std;

void cnpy2opencv_3d(std::string const& data_fname, cv::Mat& out)

{

// Load the data from file

cnpy::NpyArray npy_data = cnpy::npy_load(data_fname);

// Get pointer to data

float* ptr = npy_data.data<float>();

// Get the shape of data

int dim_1 = npy_data.shape[0];

int dim_2 = npy_data.shape[1];

int dim_3 = npy_data.shape[2];

int size[3] = { dim_1, dim_2, dim_3 };

out = cv::Mat(3, size, CV_32F, ptr).clone();

ptr+=(dim_2*dim_1*3);

}

int offline_test(const char *name) {

// This example is used for MLU270 and MLU220. You need to

// choose the corresponding offline model.

// When generating an offline model, you need both CNML and CNRT.

// When running an offline model, you need CNRT only.

cnrtInit(0);

// prepare model name

char fname[100] = "../offline/";

// The name parameter represents the name of the offline model file.

// It is also the name of a function in the offline model file.

strcat(fname, name);

strcat(fname, ".cambricon");

printf("load file: %s\n", fname);

// load model

cnrtModel_t model;

cnrtLoadModel(&model, fname);

cnrtDev_t dev;

cnrtGetDeviceHandle(&dev, 0);

cnrtSetCurrentDevice(dev);

// get model total memory

int64_t totalMem;

cnrtGetModelMemUsed(model, &totalMem);

printf("total memory used: %ld Bytes\n", totalMem);

// get model parallelism

int model_parallelism;

cnrtQueryModelParallelism(model, &model_parallelism);

printf("model parallelism: %d.\n", model_parallelism);

int func_num = 0;

cnrtGetFunctionNumber(model, &func_num);

printf("model function number is %d\n", func_num);

// load extract function

cnrtFunction_t function;

cnrtCreateFunction(&function);

cnrtExtractFunction(&function, model, name);

int inputNum, outputNum;

int64_t *inputSizeS, *outputSizeS;

cnrtGetInputDataSize(&inputSizeS, &inputNum, function);

cnrtGetOutputDataSize(&outputSizeS, &outputNum, function);

// prepare data on cpu

void **inputCpuPtrS = (void **)malloc(inputNum * sizeof(void *));

void **outputCpuPtrS = (void **)malloc(outputNum * sizeof(void *));

int input_idx=0;

int output_idx=0;

unsigned char *ptr=(unsigned char *)inputCpuPtrS[input_idx];

cv::Mat test_data;

string string_i;

string image_path = "/workspace/astro/dataset_npy/img";

string new_image_path;

// allocate I/O data memory on MLU

void **inputMluPtrS = (void **)malloc(inputNum * sizeof(void *));

void **outputMluPtrS = (void **)malloc(outputNum * sizeof(void *));

// prepare input buffer

for (int i = 0; i < inputNum; i++) {

// converts data format when using new interface model

inputCpuPtrS[i] = malloc(inputSizeS[i]);

string_i = to_string(i);

new_image_path = image_path.append(string_i);

image_path = "/workspace/astro/dataset_npy/img";

new_image_path = new_image_path.append(".npy");

cnpy2opencv_3d(new_image_path, test_data);

// malloc mlu memory

cnrtMalloc(&(inputMluPtrS[i]), inputSizeS[i]);

cnrtMemcpy(inputMluPtrS[i], inputCpuPtrS[i], inputSizeS[i], CNRT_MEM_TRANS_DIR_HOST2DEV);

}

// prepare output buffer

for (int i = 0; i < outputNum; i++) {

outputCpuPtrS[i] = malloc(outputSizeS[i]);

// malloc mlu memory

cnrtMalloc(&(outputMluPtrS[i]), outputSizeS[i]);

}

// prepare parameters for cnrtInvokeRuntimeContext

void **param = (void **)malloc(sizeof(void *) * (inputNum + outputNum));

for (int i = 0; i < inputNum; ++i) {

param[i] = inputMluPtrS[i];

}

for (int i = 0; i < outputNum; ++i) {

param[inputNum + i] = outputMluPtrS[i];

}

// setup runtime ctx

cnrtRuntimeContext_t ctx;

cnrtCreateRuntimeContext(&ctx, function, NULL);

// bind device

cnrtSetRuntimeContextDeviceId(ctx, 0);

cnrtInitRuntimeContext(ctx, NULL);

// compute offline

cnrtQueue_t queue;

cnrtRuntimeContextCreateQueue(ctx, &queue);

// invoke

cnrtInvokeRuntimeContext(ctx, param, queue, NULL);

// sync

cnrtSyncQueue(queue);

// copy mlu result to cpu

for (int i = 0; i < outputNum; i++) {

cnrtMemcpy(outputCpuPtrS[i], outputMluPtrS[i], outputSizeS[i], CNRT_MEM_TRANS_DIR_DEV2HOST);

}

// free memory space

for (int i = 0; i < inputNum; i++) {

free(inputCpuPtrS[i]);

cnrtFree(inputMluPtrS[i]);

}

for (int i = 0; i < outputNum; i++) {

free(outputCpuPtrS[i]);

cnrtFree(outputMluPtrS[i]);

}

free(inputCpuPtrS);

free(outputCpuPtrS);

free(param);

cnrtDestroyQueue(queue);

cnrtDestroyRuntimeContext(ctx);

cnrtDestroyFunction(function);

cnrtUnloadModel(model);

cnrtDestroy();

return 0;

}

int main() {

printf("offline test\n");

offline_test("q_model");

return 0;

}
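
None of the CNRT calls above have their return values checked, so a step that fails early (for example, a model file that cannot be opened) would only surface later as a crash. Below is a minimal sketch of a check helper. It assumes that CNRT calls return `CNRT_RET_SUCCESS` (taken to be 0) on success. `check_status`, `CHECK_CALL`, and `fake_runtime_call` are hypothetical names, and the status is handled as a plain `int` so that the sketch compiles without the CNRT headers.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical helper: treats 0 as success (assumed to match CNRT_RET_SUCCESS)
// and throws an exception naming the failing call otherwise.
inline void check_status(int status, const char* call, const char* file, int line) {
    assert(call != nullptr && file != nullptr);
    if (status != 0) {
        throw std::runtime_error(std::string(call) + " failed with status " +
                                 std::to_string(status) + " at " + file + ":" +
                                 std::to_string(line));
    }
}

// Wraps an expression so the error message contains the source text of the call.
#define CHECK_CALL(expr) check_status(static_cast<int>(expr), #expr, __FILE__, __LINE__)

// Stand-in for a runtime call so the sketch can be exercised; not a CNRT function.
inline int fake_runtime_call(bool ok) { return ok ? 0 : 1; }
```

In the program above, each call would be wrapped, for example `CHECK_CALL(cnrtLoadModel(&model, fname));`, so that the first failing step is reported by name instead of crashing later.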

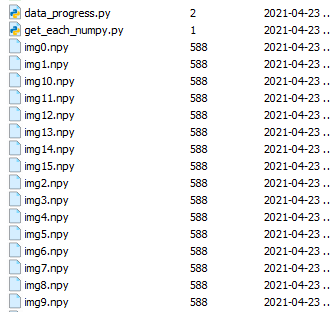

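My saved offline model (the .cambricon file) is in the offline folder one directory up. The input data is in the get_numpy folder, also one directory up. Because the inputs are in .npy format, I first load each one into an OpenCV Mat (each .npy file is 224*224*3), as shown in the figure below: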

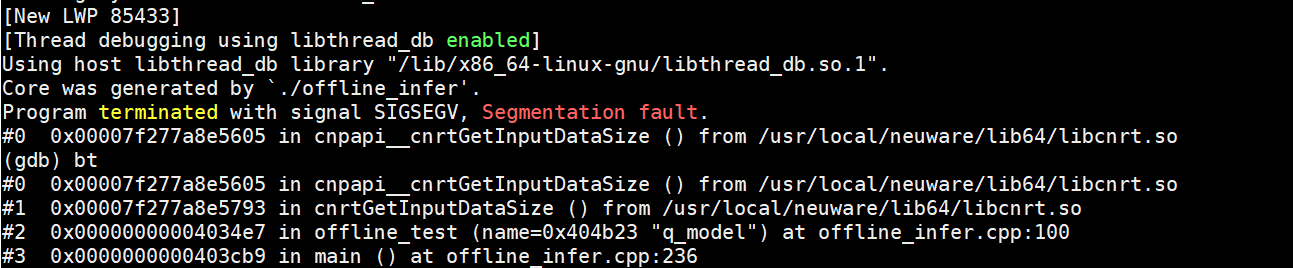
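
`cnpy2opencv_3d` reads `shape[0]` through `shape[2]` and reinterprets the data as `float` without checking either. A file with fewer than three dimensions would index past the end of `shape`, and a file saved with a smaller element type (for example uint8) would be read past the end of its buffer. Below is a minimal sketch of a layout check that could run before the data is wrapped in a Mat. `NpyInfo` and `has_expected_layout` are hypothetical names; the struct is a stand-in for the `shape`, `word_size`, and `fortran_order` fields of `cnpy::NpyArray`, so that the sketch compiles without cnpy.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for the metadata of a loaded .npy array; defined here only so the
// sketch is self-contained.
struct NpyInfo {
    std::vector<std::size_t> shape;
    std::size_t word_size;  // bytes per element (4 for float32)
    bool fortran_order;     // true if the data is stored column-major
};

// Returns true only if the array has 4-byte elements, C (row-major) order,
// and exactly the expected shape. Note that a 4-byte element size does not
// by itself distinguish float32 from int32.
inline bool has_expected_layout(const NpyInfo& info,
                                const std::vector<std::size_t>& expected_shape) {
    return info.word_size == sizeof(float) && !info.fortran_order &&
           info.shape == expected_shape;
}
```

For the files described here, the expected shape would be `{224, 224, 3}`; a file that fails the check should be rejected before any pointer into its data is used.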

The program crashes with a core dump. Could someone please help me take a look?

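I suspect the problem is in the data input part. The offline model examples I have looked at rarely include a step that loads data into CPU memory, so I suspect I wrote this step incorrectly.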
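
In the code as posted, `test_data` is filled by `cnpy2opencv_3d` but never copied into `inputCpuPtrS[i]`, so the buffer passed to `cnrtMemcpy` still holds uninitialized memory. Below is a minimal sketch of that missing step. It assumes the model expects float32 input in the same layout as the Mat; if the model expects a different data type or layout, a conversion would be needed instead. `copy_to_host_buffer` is a hypothetical helper, and this step alone may not explain the core dump.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: copies `count` floats into a host buffer of `dst_bytes`
// bytes, and refuses to copy when the two sizes do not match exactly.
inline bool copy_to_host_buffer(const float* src, std::size_t count,
                                void* dst, std::int64_t dst_bytes) {
    if (src == nullptr || dst == nullptr || dst_bytes < 0) return false;
    const std::size_t src_bytes = count * sizeof(float);
    if (src_bytes != static_cast<std::size_t>(dst_bytes)) return false;
    std::memcpy(dst, src, src_bytes);
    return true;
}
```

Inside the input loop this could be called as `copy_to_host_buffer(test_data.ptr<float>(), test_data.total(), inputCpuPtrS[i], inputSizeS[i])`. A `false` result would indicate that the image size and the size reported by `cnrtGetInputDataSize` disagree.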

Thanks, everyone, for your help!
