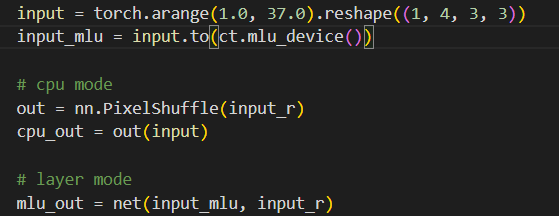

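Hello, I have another question. When a tensor output by BANGC is passed back to PyTorch (libtorch), it goes through an automatic NHWC-to-NCHW conversion. Is there a way to skip this step, or to convert NCHW back to NHWC in libtorch? My BANGC kernel already produces the correct result, but after this automatic conversion the output is wrong.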
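To make the layout question concrete, here is a minimal sketch in plain C++ (no MLU or libtorch dependency) of what an NCHW-to-NHWC reorder does on a flat buffer, plus its inverse. The function names `nchw_to_nhwc` and `nhwc_to_nchw` are hypothetical helpers introduced for illustration, not part of CNML or libtorch. In stock libtorch the same reorder is usually written as `tensor.permute({0, 2, 3, 1}).contiguous()`; whether that behaves the same way on MLU tensors in this SDK version is an assumption that would need checking.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: reorder a flat NCHW buffer into NHWC order.
std::vector<float> nchw_to_nhwc(const std::vector<float>& src,
                                std::size_t n, std::size_t c,
                                std::size_t h, std::size_t w) {
  assert(src.size() == n * c * h * w);
  std::vector<float> dst(src.size());
  for (std::size_t in = 0; in < n; ++in)
    for (std::size_t ic = 0; ic < c; ++ic)
      for (std::size_t ih = 0; ih < h; ++ih)
        for (std::size_t iw = 0; iw < w; ++iw) {
          // Offset of element (in, ic, ih, iw) in each layout.
          std::size_t nchw = ((in * c + ic) * h + ih) * w + iw;
          std::size_t nhwc = ((in * h + ih) * w + iw) * c + ic;
          dst[nhwc] = src[nchw];
        }
  return dst;
}

// Hypothetical helper: the inverse reorder, NHWC back to NCHW.
std::vector<float> nhwc_to_nchw(const std::vector<float>& src,
                                std::size_t n, std::size_t c,
                                std::size_t h, std::size_t w) {
  assert(src.size() == n * c * h * w);
  std::vector<float> dst(src.size());
  for (std::size_t in = 0; in < n; ++in)
    for (std::size_t ic = 0; ic < c; ++ic)
      for (std::size_t ih = 0; ih < h; ++ih)
        for (std::size_t iw = 0; iw < w; ++iw) {
          std::size_t nchw = ((in * c + ic) * h + ih) * w + iw;
          std::size_t nhwc = ((in * h + ih) * w + iw) * c + ic;
          dst[nchw] = src[nhwc];
        }
  return dst;
}
```

If the kernel writes its result in NHWC order while the framework assumes the buffer is NCHW (or the other way round), the values come back intact but at the wrong coordinates, which would match the symptom described.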


LV.2 #5 幺白幺木 replying to #4 踏雪寻梅

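Dear developer, please provide your board model and SDK version. Also, would it be convenient to post the code that fails verification on your side? We will analyze it and get back to you.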

MLU version information: cat /proc/driver/cambricon/mlus/0000:17:00.0/information

Device name: MLU270-F4

Device inode path: /dev/cambricon_dev0

Device Major: 234

Device Minor: 0

Driver Version: v4.9.2

MCU Version: v1.1.4

Board Serial Number: SN/182101100027

MLU Firmware Version: 4.9.2

Board CV: 1

IPU Freq: 1000MHz

Interrupt Mode: MSI

Bus Location: 17_0_0

Bus Type: PCIE

LnkCap: Speed 8.0GT/s, Width x16

Region 0: Memory at 80000000 [size=256M]

Region 2: Memory at 78000000 [size=64M]

Region 4: Memory at 7c000000 [size=64M]

The verification code is the cout<<input<<endl statement (shown in bold, enlarged type):

#include "aten/operators/cnml/internal/cnml_internal.h"

#include <iostream>

using namespace std;

namespace torch_mlu {

namespace cnml {

namespace ops {

at::Tensor cnml_pixelshuffle_internal(const at::Tensor& input, const int64_t input_r) {

auto options =

at::TensorOptions(c10::ScalarType::Float).device(at::DeviceType::MLU);

auto input_size = input.sizes().vec();

int r = input_r;

std::vector<int64_t> output_shape(4);

output_shape[0] = input_size[0];

output_shape[1] = input_size[1] / (r * r);

output_shape[2] = input_size[2] * r;

output_shape[3] = input_size[3] * r;

auto output = at::empty(output_shape, input.options());

// prepare input cnml tensor

cnmlTensor_t input_cnml_tensor[1];

auto* input_impl = getMluTensorImpl(input);

input_cnml_tensor[0] = input_impl->CreateCnmlTensor(CNML_TENSOR, toCnmlDataType(input.dtype()));

// prepare output cnml tensor

cnmlTensor_t output_cnml_tensor[1];

auto* output_impl = getMluTensorImpl(output);

output_cnml_tensor[0] = output_impl->CreateCnmlTensor(CNML_TENSOR, toCnmlDataType(output.dtype()));

// End the execution flow if not MLU device

CHECK_MLU_DEVICE(output);

int total_number = input.numel();

int dtype = 0;

switch (toCnmlDataType(input.dtype())) {

case CNML_DATA_FLOAT32:

dtype = 4;

break;

case CNML_DATA_FLOAT16:

dtype = 2;

break;

default:

CNLOG(ERROR) << "Pixelshuffle op only supports FLOAT32 & FLOAT16";

}

// for(int i = 0; i < total_number; i++)

// std::cout<<*((float*)(input+i))<<std::endl;

cout<<input<<endl;

// setup operator

cnmlBaseOp_t pixelshuffle_op;

cnmlPluginPixelshuffleOpParam_t param;

TORCH_CNML_CHECK(cnmlCreatePluginPixelshuffleOpParam(&param,

total_number,

input_size[0],

input_size[2],

input_size[3],

input_size[1],

input_r,

dtype,

GET_CORE_VERSION));

TORCH_CNML_CHECK(cnmlCreatePluginPixelshuffleOp(&pixelshuffle_op,

param,

input_cnml_tensor,

output_cnml_tensor));

// return to JIT if running mode is fuse

CHECK_RETURN_TO_FUSE(pixelshuffle_op, output);

auto queue = getCurQueue();

// compile op

TORCH_CNML_CHECK(cnmlCompileBaseOp(pixelshuffle_op, GET_CORE_VERSION, GET_CORE_NUMBER));

// compute operator

void* input_ptrs[1];

input_ptrs[0] = input_impl->raw_mutable_data();

cout<<input_ptrs[0]<<endl;

void* output_ptrs[1];

output_ptrs[0] = output_impl->raw_mutable_data();

TORCH_CNML_CHECK(cnmlComputePluginPixelshuffleOpForward(pixelshuffle_op,

input_ptrs,

1,

output_ptrs,

1,

queue));

syncQueue(queue);

// destroy params and ops

if (param != nullptr) {

TORCH_CNML_CHECK(cnmlDestroyPluginPixelshuffleOpParam(&param));

param = nullptr;

}

if (pixelshuffle_op != nullptr) {

TORCH_CNML_CHECK(cnmlDestroyBaseOp(&pixelshuffle_op));

pixelshuffle_op = nullptr;

}

// std::cout<<output<<std::endl;

// output.print();

return output;

}

} // namespace ops

} // namespace cnml

} // namespace torch_mlu
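For checking the operator's output independently of the MLU, a CPU reference can help. The following is a minimal sketch in plain C++, assuming the standard PyTorch pixel shuffle semantics on an NCHW buffer, where `out[n][c][h*r+i][w*r+j] = in[n][c*r*r + i*r + j][h][w]`. It uses the same output shape as the code above (`C / (r * r)`, `H * r`, `W * r`). The name `pixel_shuffle_nchw` is hypothetical and is not a CNML or libtorch function.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference: pixel shuffle on a flat NCHW buffer.
// Input shape (n, c, h, w); output shape (n, c / (r*r), h*r, w*r).
std::vector<float> pixel_shuffle_nchw(const std::vector<float>& in,
                                      std::size_t n, std::size_t c,
                                      std::size_t h, std::size_t w,
                                      std::size_t r) {
  assert(r > 0 && c % (r * r) == 0);
  assert(in.size() == n * c * h * w);
  const std::size_t oc = c / (r * r);
  const std::size_t oh = h * r;
  const std::size_t ow = w * r;
  std::vector<float> out(in.size());
  for (std::size_t in_n = 0; in_n < n; ++in_n)
    for (std::size_t k = 0; k < oc; ++k)
      for (std::size_t ih = 0; ih < h; ++ih)
        for (std::size_t iw = 0; iw < w; ++iw)
          for (std::size_t i = 0; i < r; ++i)
            for (std::size_t j = 0; j < r; ++j) {
              // Source channel holding sub-pixel (i, j) of output channel k.
              std::size_t sc = k * r * r + i * r + j;
              std::size_t src = ((in_n * c + sc) * h + ih) * w + iw;
              std::size_t dst =
                  ((in_n * oc + k) * oh + (ih * r + i)) * ow + (iw * r + j);
              out[dst] = in[src];
            }
  return out;
}
```

Copying the MLU result back to the host and comparing it element by element against this reference would show whether the values are wrong or only laid out in a different order (NHWC versus NCHW).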
