
1. Download the source code

GitHub repository: https://github.com/Cambricon/CNStream

2. Change into the sample directory

cd /cnstream/samples/detection-demo

3. Add the dehazing postprocessor, Dehazepost.cpp, to the postprocess directory

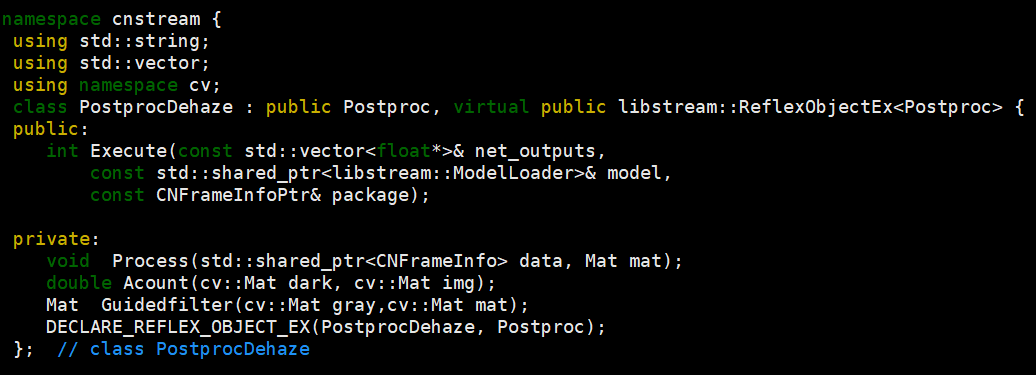

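Define your own class, PostprocDehaze, that inherits from the parent class Postproc and implements its virtual function Execute. (The complete code follows.)

int PostprocDehaze::Execute(const std::vector<float*>& net_outputs, const std::shared_ptr<libstream::ModelLoader>& model, const CNFrameInfoPtr& package)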

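#include "postproc.hpp"

// Standard-library and OpenCV headers used by the code below.
#include <cstdio>
#include <cstring>
#include <iostream>
#include <memory>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include "cnbase/cntypes.h"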

namespace cnstream {

using std::string;

using std::vector;

using namespace cv;

class PostprocDehaze : public Postproc, virtual public libstream::ReflexObjectEx<Postproc> {

public:

int Execute(const std::vector<float*>& net_outputs,

const std::shared_ptr<libstream::ModelLoader>& model,

const CNFrameInfoPtr& package);

private:

void Process(std::shared_ptr<CNFrameInfo> data, Mat mat);

double Acount(cv::Mat dark, cv::Mat img);

Mat Guidedfilter(cv::Mat gray,cv::Mat mat);

DECLARE_REFLEX_OBJECT_EX(PostprocDehaze, Postproc);

}; // class PostprocDehaze

Mat PostprocDehaze::Guidedfilter(cv::Mat img,cv::Mat mat){

Mat gray;

int height = img.rows;

int width = img.cols;

cvtColor(img,gray,CV_BGR2GRAY);

gray.convertTo(gray,CV_32FC1);

for (int i = 0; i<height; i++)

{

for (int j = 0; j<width; j++)

{

gray.at<float>(i, j)=gray.at<float>(i, j)/255.0;

}

}

int r = 60;

double eps = 0.0001;

Mat mean_I;

mean_I.convertTo(mean_I,CV_32FC1);

boxFilter(gray,mean_I,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);// box filter: blurs the image by averaging over an r x r window

Mat mean_p ;

mean_p.convertTo(mean_p,CV_32FC1);

boxFilter(mat,mean_p,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);

Mat mean_Ip;

mean_Ip.convertTo(mean_Ip,CV_32FC1);

Mat GM;

GM.convertTo(GM,CV_32FC1);

GM=gray.mul(mat);

boxFilter(GM,mean_Ip,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);

Mat cov_Ip;

cov_Ip.convertTo(cov_Ip,CV_32FC1);

Mat MM;

MM.convertTo(MM,CV_32FC1);

MM=mean_I.mul(mean_p);

cov_Ip = mean_Ip - MM;

Mat mean_II;

mean_II.convertTo(mean_II,CV_32FC1);

Mat GG;

GG.convertTo(GG,CV_32FC1);

GG=gray.mul(gray);

boxFilter(GG,mean_II,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);

Mat var_I;

var_I.convertTo(var_I,CV_32FC1);

Mat II;

II.convertTo(II,CV_32FC1);

II=mean_I.mul(mean_I);

var_I = mean_II - II;

Mat a;

a.convertTo(a,CV_32FC1);

a = cov_Ip/(var_I + eps);

Mat b;

b.convertTo(b,CV_32FC1);

Mat AM;

AM.convertTo(AM,CV_32FC1);

AM=a.mul(mean_I);

b = mean_p - AM;

Mat mean_a ;

mean_a.convertTo(mean_a,CV_32FC1);

boxFilter(a,mean_a,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);

Mat mean_b ;

mean_b.convertTo(mean_b,CV_32FC1);

boxFilter(b,mean_b,-1,Size(r,r),Point(-1,-1),true,BORDER_DEFAULT);

Mat q;

q.convertTo(q,CV_32FC1);

Mat MG;

MG.convertTo(MG,CV_32FC1);

MG=mean_a.mul(gray);

q = MG + mean_b;

q=cv::max(q,0.1);

return q;

}

double PostprocDehaze::Acount(cv::Mat dark, cv::Mat img){

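double sum=0; // sum of the channel values of the pixels used to estimate A

int pointNum = 0; // number of pixels that meet the condition

double A = 0; // atmospheric light A

double pix =0; // dark-channel gray level that marks off the brightest 0.1% of pixels

CvScalar pixel; // pixel of the hazy image at a position selected in the dark channel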

float stretch_p[256], stretch_p1[256], stretch_num[256];

int height = img.rows;

int width = img.cols;

// zero-initialize the three arrays

memset(stretch_p, 0, sizeof(stretch_p));

memset(stretch_p1, 0, sizeof(stretch_p1));

memset(stretch_num, 0, sizeof(stretch_num));

IplImage tmp = IplImage(dark);

CvArr* arr = (CvArr*)&tmp;

IplImage tmp1 = IplImage(img);

CvArr* arr1 = (CvArr*)&tmp1;

// count how many times each gray level occurs in the dark channel

for (int i = 0; i<height; i++)

{

for (int j = 0; j<width; j++)

{

double pixel0 = cvGetReal2D(arr, i, j);

int pixel = (int)pixel0;

stretch_num[pixel]++;

}

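}

// compute the probability of each gray level

for (int i = 0; i<256; i++)

{

stretch_p[i] = stretch_num[i] / (height*width);

}

// accumulate the probabilities; pix is the gray level at which the cumulative probability first exceeds 0.999, i.e. the cutoff for the brightest 0.1% of dark-channel pixels

for (int i = 0; i<256; i++)

{

for (int j = 0; j<=i; j++)

{

stretch_p1[i] += stretch_p[j];

if (stretch_p1[i] > 0.999)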

{

pix = (double)i;

i = 256;

break;

}

}

}

for (int i = 0; i< height; i++)

{

for (int j = 0; j<width; j++)

{

double temp = cvGetReal2D(arr, i, j);

if (temp > pix)

{

pixel = cvGet2D(arr1, i, j);

pointNum++;

sum += pixel.val[0];

sum += pixel.val[1];

sum += pixel.val[2];

}

}

}

A = sum / (3 * pointNum);

if (A > 220.0) // cap A at 220 on the 0-255 scale, before normalizing

{

A = 220.0;

}

A = A/255.0; // normalize A to [0, 1]

printf("float: %f\n", A);

return A;

}

int PostprocDehaze::Execute(const std::vector& net_outputs,

const std::shared_ptr& model,

const CNFrameInfoPtr& package) {

if (net_outputs.size() != 1) {

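std::cerr << "[Warning] the dehaze network must have exactly one output, but got " << net_outputs.size() << std::endl;

return -1;

}

auto sp = model->output_shapes()[0];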

auto data = net_outputs[0];

int height = sp.h();

int width = sp.w();

Mat mat = Mat(height,width,CV_32FC1, data);// transmission map output by the network

std::cout<<"mat height::"<<height<<width<<std::endl;

Process(package,mat);

return 0;

}

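void PostprocDehaze::Process(std::shared_ptr<CNFrameInfo> data, Mat mat) {

int width = mat.cols;

int height = mat.rows;

std::cout<<"data height::"<<height<<width<<std::endl;

Mat img = *data->frame.ImageBGR(); // original frame in BGR; ImageBGR() is assumed to return a cv::Mat* here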

Mat q, Dehaze;

double A = 0.0;// atmospheric light value

imwrite("img.jpg",img); // save the original image

cv::resize(img, img, Size(width,height));// resize the original image to the size of the network output

vector<Mat> rgb;

split(img,rgb);

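Mat dc=cv::min(cv::min( rgb.at(0),rgb.at(1)),rgb.at(2));// dark channel: the per-pixel minimum of the three color channels, which gives a grayscale image

Mat kernel = getStructuringElement(cv::MORPH_RECT,Size(15,15));

Mat dark;

erode(dc,dark,kernel);// erosion acts as a minimum filter

A = Acount(dark,img);// 1. take the brightest 0.1% of pixels in the dark channel; 2. use the original image's values at those positions to obtain A (Acount averages them)

q = Guidedfilter(img,mat);// guided filter

Dehaze = Mat(height,width,CV_32FC3);

img.convertTo(img,CV_32FC3); // convert to float so the image can be normalized for the computation below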

for (int i = 0; i<height; i++)

{

for (int j = 0; j<width; j++)

{

img.at<Vec3f>(i, j)[0]=img.at<Vec3f>(i, j)[0]/255.0;

img.at<Vec3f>(i, j)[1]=img.at<Vec3f>(i, j)[1]/255.0;

img.at<Vec3f>(i, j)[2]=img.at<Vec3f>(i, j)[2]/255.0;

}

}

for (int i = 0; i<height; i++)

{

for (int j = 0; j<width; j++)

{

Dehaze.at<Vec3f>(i, j)[0]=(((img.at<Vec3f>(i, j)[0]-A)/q.at<float>(i, j))+ A)*255;

Dehaze.at<Vec3f>(i, j)[1]=(((img.at<Vec3f>(i, j)[1]-A)/q.at<float>(i, j))+ A)*255;

Dehaze.at<Vec3f>(i, j)[2]=(((img.at<Vec3f>(i, j)[2]-A)/q.at<float>(i, j))+ A)*255;

}

}

static int i = 0;

std::string img_name = std::to_string(i++) + ".jpg";

imwrite(img_name, Dehaze);

}

IMPLEMENT_REFLEX_OBJECT_EX(PostprocDehaze, Postproc)

} // namespace cnstream

The network output is available from net_outputs, and the original image from package. The flow is as follows:

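Mat mat = Mat(height,width,CV_32FC1, data)

Wraps the network output as the transmission map t(x).

img = cv::Mat(data->frame.height*3/2, data->frame.width, CV_8UC1, img_data, data->frame.strides[0]);

Gets the original image.

Mat dc=cv::min(cv::min( rgb.at(0),rgb.at(1)),rgb.at(2));

The dark channel is the per-pixel minimum of the three color channels, which gives a grayscale image.

A = Acount(dark,img);

1. Take the brightest 0.1% of pixels in the dark channel. 2. At those positions, look up the original image; the value of the brightest point there is conventionally used as A (Acount averages the values at those positions).

q = Guidedfilter(img,mat);

Guided filtering refines the transmission map, using the grayscale original image as the guide.

Dehaze.at<Vec3f>(i, j)[0]=(((img.at<Vec3f>(i, j)[0]-A)/q.at<float>(i, j))+ A)*255;

Recovers the dehazed image from the transmission map, the original image, and the atmospheric light value.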
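As a minimal sketch of the flow above, the following standard-library C++ walks through the dark channel, the estimate of A, and the recovery step on a flat list of pixels. It assumes the usual haze model I(x) = J(x) t(x) + A (1 - t(x)), which the recovery line inverts. The names Pixel, DarkChannel, EstimateAtmosphericLight, and Recover, and the parameters fraction and t_min, are hypothetical and not part of CNStream or OpenCV. It is not the OpenCV code from the post: it skips the 15x15 erosion and the guided filter, and it picks the brightest pixels by sorting rather than with a histogram.

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// One pixel with three color channels, each normalized to [0, 1].
using Pixel = std::array<float, 3>;

// Per-pixel dark channel: the minimum over the three color channels.
// (The post additionally erodes this map with a 15x15 kernel; omitted here.)
std::vector<float> DarkChannel(const std::vector<Pixel>& img) {
  std::vector<float> dark(img.size());
  for (std::size_t i = 0; i < img.size(); ++i) {
    dark[i] = std::min({img[i][0], img[i][1], img[i][2]});
  }
  return dark;
}

// Atmospheric light A: mean channel value of the hazy pixels whose
// dark-channel value is among the brightest `fraction` (at least one pixel).
float EstimateAtmosphericLight(const std::vector<Pixel>& img,
                               const std::vector<float>& dark,
                               float fraction = 0.001f) {
  assert(!img.empty() && img.size() == dark.size());
  std::vector<std::size_t> order(img.size());
  std::iota(order.begin(), order.end(), std::size_t{0});
  const std::size_t keep = std::min(
      img.size(),
      std::max<std::size_t>(1, static_cast<std::size_t>(img.size() * fraction)));
  // Move the indices of the `keep` largest dark-channel values to the front.
  std::partial_sort(order.begin(),
                    order.begin() + static_cast<std::ptrdiff_t>(keep),
                    order.end(),
                    [&dark](std::size_t a, std::size_t b) { return dark[a] > dark[b]; });
  float sum = 0.0f;
  for (std::size_t k = 0; k < keep; ++k) {
    const Pixel& p = img[order[k]];
    sum += p[0] + p[1] + p[2];
  }
  return sum / (3.0f * static_cast<float>(keep));
}

// Invert the haze model per channel: J = (I - A) / max(t, t_min) + A.
std::vector<Pixel> Recover(const std::vector<Pixel>& img,
                           const std::vector<float>& t, float A,
                           float t_min = 0.1f) {
  assert(img.size() == t.size());
  std::vector<Pixel> out(img.size());
  for (std::size_t i = 0; i < img.size(); ++i) {
    const float ti = std::max(t[i], t_min);  // avoid dividing by values near zero
    for (std::size_t c = 0; c < 3; ++c) {
      out[i][c] = (img[i][c] - A) / ti + A;
    }
  }
  return out;
}
```

For instance, a hazy pixel (0.5, 0.6, 0.7) with A = 0.8 and t = 0.5 recovers to roughly (0.2, 0.4, 0.6). The t_min floor plays the same role as the cv::max(q, 0.1) clamp at the end of Guidedfilter.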

4. Image path

My own images are stored in

/detection-demo/test_dehaze/images

A files.list_image file is needed to specify the image paths.

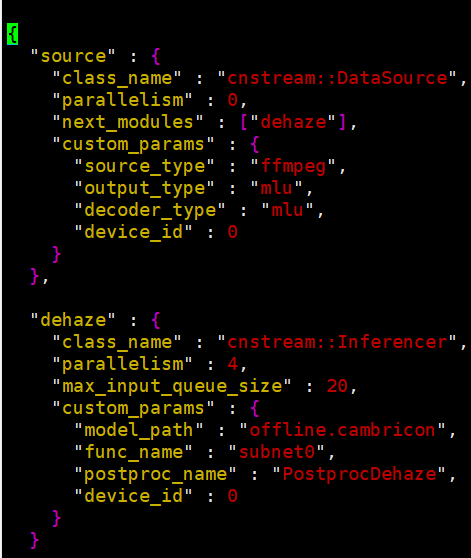

5. Edit the configuration file dehaze_config.json

(a) next_modules: dehaze

(b) model_path: the path to the model

(c) postproc_name: the class name of the subclass
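The three fields might fit together as in the fragment below. This is only a sketch under assumptions: the post names next_modules, model_path, and postproc_name, while the module name source, the class_name and custom_params keys, and the placeholder model path are guesses made for illustration. Check them against the screenshot and against the JSON files shipped with your CNStream version.

```json
{
  "source": {
    "class_name": "cnstream::DataSource",
    "next_modules": ["dehaze"]
  },
  "dehaze": {
    "class_name": "cnstream::Inferencer",
    "custom_params": {
      "model_path": "/path/to/your/dehaze/model",
      "postproc_name": "PostprocDehaze"
    }
  }
}
```

The value of postproc_name has to match the class name registered with DECLARE_REFLEX_OBJECT_EX, here PostprocDehaze.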

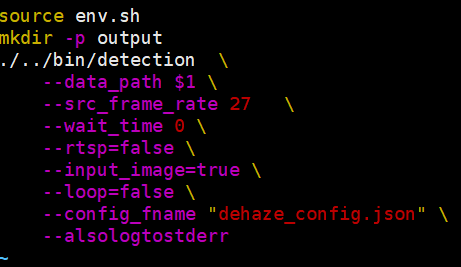

6. Run script

The image path is passed in as an argument. When the input is images, set input_image to true; when the input is a video, set it to false.

7. Build and run

Build in the /cnstream/build directory:

cmake ..

make -j8

Run:

./run_dehaze.sh files.list_image

8. Results

The output images are saved with imwrite. Videos are likewise saved frame by frame as images; this will be reworked later.
