
For offline inference, generating the offline model requires a model conversion, and I ran into a problem at that step.

I have already quantized the original yolov4 model to int8, and that step finished without errors.

While converting the yolov4 .pb model to a Cambricon model (by running ./tensorflow_pb_to_cambricon.sh yolov4 int8 1 1 small MLU220), the following error occurred:

F tensorflow/core/kernels/mlu_kernels/mlu_ops/mlu_op.cc:43][6177] 'reinterpret_cast<cnnlHandle_t>(context->op_device_context()->stream()->implementation()->GpuStreamMemberHack())' Must be non NULL

Model description file:

model_name: yolov_608.cambricon
session_run{
input_nodes(1):
"Placeholder",2,608,608,3
output_nodes(3):
"concat_9"
"concat_10"
"concat_11"
}

Quantization used a batch size of 2 with 608x608 color images.
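The description file declares one input node with an NHWC shape of 2x608x608x3 and three output nodes. As a quick consistency check, a short Python sketch can parse that block and confirm that the input batch dimension matches the batch size of 2 used for quantization. The grammar is inferred from this single file rather than from Cambricon documentation, and parse_description, check_batch and ModelDescription are hypothetical helpers, not part of any Cambricon tool.

```python
import re
from dataclasses import dataclass, field


@dataclass
class ModelDescription:
    model_name: str = ""
    inputs: list = field(default_factory=list)   # (node_name, shape) pairs
    outputs: list = field(default_factory=list)  # output node names


def parse_description(text: str) -> ModelDescription:
    """Parse a description file laid out like the one in this post.

    Assumption: the grammar is inferred from this one example only.
    """
    desc = ModelDescription()
    section = None
    declared = {}  # "input"/"output" -> count written as e.g. input_nodes(1)
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line in ("session_run{", "}"):
            continue
        header = re.fullmatch(r"(input|output)_nodes\((\d+)\):", line)
        if line.startswith("model_name:"):
            desc.model_name = line.split(":", 1)[1].strip()
        elif header:
            section = header.group(1)
            declared[section] = int(header.group(2))
        elif section == "input":
            name, *dims = (part.strip() for part in line.split(","))
            desc.inputs.append((name.strip('"'), tuple(int(d) for d in dims)))
        elif section == "output":
            desc.outputs.append(line.strip('"'))
    # The counts in parentheses should match the nodes actually listed.
    if declared.get("input", 0) != len(desc.inputs):
        raise ValueError("input_nodes count does not match listed inputs")
    if declared.get("output", 0) != len(desc.outputs):
        raise ValueError("output_nodes count does not match listed outputs")
    return desc


def check_batch(desc: ModelDescription, calib_batch: int) -> None:
    """Raise if an input's leading (batch) dim differs from the calibration batch."""
    for name, shape in desc.inputs:
        if shape[0] != calib_batch:
            raise ValueError(f"{name}: batch {shape[0]} != calibration batch {calib_batch}")


DESCRIPTION = """\
model_name: yolov_608.cambricon
session_run{
input_nodes(1):
"Placeholder",2,608,608,3
output_nodes(3):
"concat_9"
"concat_10"
"concat_11"
}
"""

if __name__ == "__main__":
    desc = parse_description(DESCRIPTION)
    check_batch(desc, calib_batch=2)  # batch size used for quantization
    print(desc)
```

This only rules out one inconsistency between the description file and the quantization setup; it does not show whether a batch mismatch could trigger this particular error.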

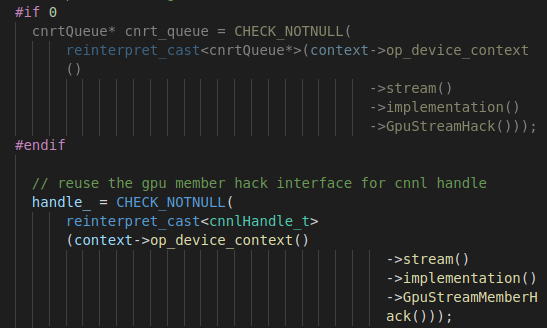

Tracing through the source code, I found that the error comes from the GpuStreamMemberHack() call below, which apparently returns NULL.

The comment on the second GpuStreamMemberHack() reads:

See the above comment on GpuStreamHack -- this further breaks abstraction for Eigen within distbelief, which has strong ties to CUDA or ROCm as a platform, and a historical attachment to a programming model which takes a stream-slot rather than a stream-value.

I take this to mean it refers back to GpuStreamHack above, which is presumably the part that was not compiled in. Its comment reads:

Returns the GPU stream associated with this platform's stream implementation. WARNING: checks that the underlying platform is, in fact, CUDA or ROCm, causing a fatal error if it is not. This hack is made available solely for use from distbelief code, which temporarily has strong ties to CUDA or ROCm as a platform.

So it seems to check whether the platform is CUDA or ROCm, but since I am running in a virtual machine, there is no GPU.
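The failing pattern can be made concrete with a toy model. In upstream TensorFlow 1.x, StreamInterface::GpuStreamMemberHack() is a virtual method whose base-class default returns nullptr, so it only yields a handle when the active platform's stream implementation overrides it. The Python sketch below models that pattern and the non-NULL check from the log. The class names, the "cnnl-handle" placeholder, and the assumption that Cambricon's MLU stream overrides this hook (which the reinterpret_cast<cnnlHandle_t> in the log suggests but does not prove) are illustrative only.

```python
from typing import Optional


class StreamInterface:
    """Stand-in for a StreamExecutor stream implementation (simplified model)."""

    def gpu_stream_member_hack(self) -> Optional[object]:
        # Models the upstream base-class default: no handle unless overridden.
        return None


class HypotheticalMluStream(StreamInterface):
    """Hypothetical MLU-backed stream that overrides the hook with a real handle."""

    def __init__(self, handle: object) -> None:
        self._handle = handle

    def gpu_stream_member_hack(self) -> Optional[object]:
        return self._handle


def get_cnnl_handle(stream: StreamInterface) -> object:
    """Mimics the non-NULL check at mlu_op.cc:43 that aborted the conversion."""
    handle = stream.gpu_stream_member_hack()
    if handle is None:
        raise RuntimeError("GpuStreamMemberHack() returned NULL: Must be non NULL")
    return handle


if __name__ == "__main__":
    print(get_cnnl_handle(HypotheticalMluStream("cnnl-handle")))  # prints cnnl-handle
    try:
        get_cnnl_handle(StreamInterface())
    except RuntimeError as err:
        print(err)
```

Under this model, a NULL handle means the op ran on a stream that is not MLU-backed. One possible cause, consistent with the virtual-machine observation above but not confirmed here, is that no MLU device was visible to the process.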

How should I resolve this error?
